🪖 How TikTok drags users into the digital war zone

By: Kathy Meßmer and Martin Degeling

During the ongoing war between Israel and Hamas, social media users who open their feeds encounter a melange of true and false information, state and terrorist propaganda, real and fake war footage, news and opinions. This mixture often contains extremely violent and disturbing content.

As several news outlets have already reported about this conflict, various actors are weaponizing social media, including TikTok, much as they did in other wars, most recently in Ukraine. That is why European Commissioner Thierry Breton warned TikTok, along with other platforms, to follow EU rules on dealing with illegal content. Failure to comply could result in penalties.

Indeed, TikTok plays a crucial role in recommending war content to users, including in Germany. However, our preliminary research suggests that it does so not (only) in the way people usually think of TikTok, namely as an algorithmic juggernaut that tracks users’ viewing preferences. It’s actually a bit messier than that.

“You may like” on TikTok

In their reporting on the latest escalation, outlets like the Washington Post refer to the suggestions on TikTok’s search page as “trending searches” to demonstrate the topic’s relevance to users. We interpret them differently, because our research suggests that the search strings TikTok recommends are not necessarily trending topics.

At SNV, we have been studying TikTok for several months now, and one feature caught our eye: the suggestions on what to search for. If you open TikTok’s search page (by clicking on the 🔎 icon), you see not only your previous searches but also a section called “You may like”. This section always contains ten search strings, three of which are usually highlighted by a 🔥 icon.

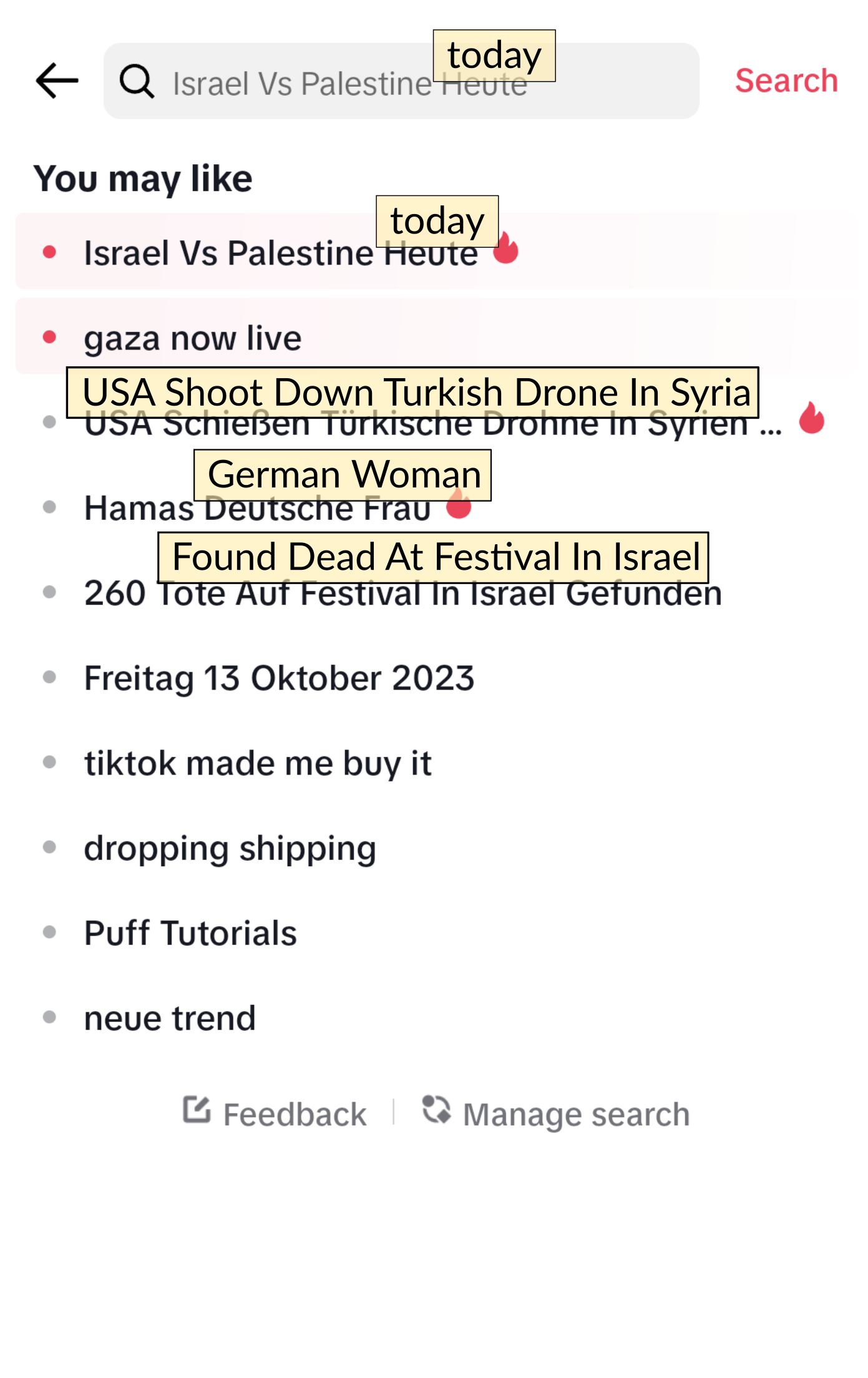

“You may like”, as shown to a German user on October 13, 2023. Personalized search suggestions were deactivated for this screenshot. Yellow boxes show the translated search terms.

What struck us most over the last few weeks is how irritating and badly curated these suggestions are. For example, they often contain strings like “so-and-so died”, suggesting the death of a celebrity who is, in fact, alive.

“You may like” suggested search as shown on October 10, 2023, suggesting that the rapper Chika has died. Yet she seems to be in ok-ish health.

These search suggestions also surface seemingly random topics, some of which were in the news days earlier but don’t seem to interest TikTok users.

“You may like” suggested search as shown on September 28, 2023, suggesting that Italy’s former president had died. For a change, this was actually true, but he had died almost a week earlier, on September 22, and his death was no longer a “trending topic” at the time.

“You may like” suggested search as shown on September 5, 2023, which refers to the funding of the Austrian consumer protection non-profit “Verein für Konsumenteninformation” (VKI). It was not a topic of debate in German news and didn’t strike us as important to many TikTok users.

Often, when you click on one of these search suggestions and land on the results page, you find no related videos at all. In the VKI case, for example, where the suggested search term referenced a very specific event in Austrian news, the results page showed no videos.

Unlike TikTok’s For You Page, these suggestions, and the 🔥 recommendations in particular, are less personalized. All of this leads us to hypothesize that the recommender system behind the Search Page draws not solely on what happens on the platform but also on external data, for instance news headlines. And it is poorly curated.

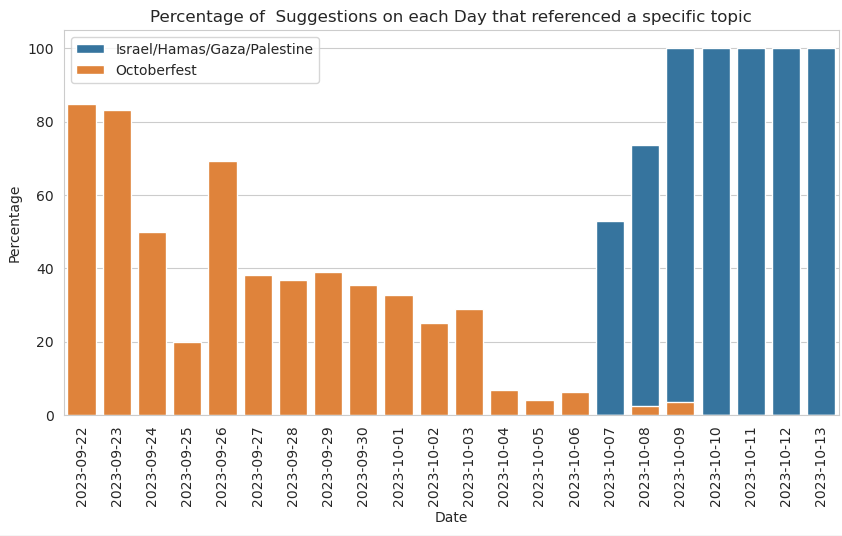

Why is this important? As long as the search suggestions are “Kotzhügel” (“Puke Hill”) or “Oktoberfest”, as they were during and after the Oktoberfest season in Munich, this may be irritating or entertaining, but nothing to worry about.

It is a different story when the search suggestions turn to much more violent and potentially traumatizing content. And that’s exactly what happened, as the following lists of “You may like” suggestions show.

You May Like War

On October 3, 2023, we checked the suggested search topics around 40 times, and the top 10 suggested search terms we found in Germany were as follows:

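- Rosie Oktoberfest

- Oktoberfest Kotzhügel (Oktoberfest Puke Hill)

- Julienco Ayliva

- Chibi Doll Girl Outfit

- Van Gogh Pokemon

- Kaan Yavi Ex Frau (Kaan Yavi ex-wife)

- Mexify Mutter Tot Hamed (Mexify mother dead Hamed)

- Köln Marathon 2023 (Cologne Marathon 2023)

- Frankfurt Verliert 0:2 In Wolfsburg (Frankfurt loses 0:2 in Wolfsburg)

- From Satan Ist To Islam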

On October 7, 2023, the day of the attack on Israel, the top 10 “You may like” suggestions were as follows:

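- Italien Bus Unfall (Italy bus accident)

- Israel Angriff Heute (Israel attack today)

- Israel Angriff Heute Live (Israel attack today live)

- Israel Gaza Heute (Israel Gaza today)

- Israel Heute Live (Israel today live)

- Putin Geburtstag 2023 (Putin birthday 2023)

- Israel October 2023

- Murcia Spain Fire

- Osnabrück Düsseldorf

- Palestina Israel (Palestine Israel)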

Since then, search suggestions mentioning Israel, Gaza or Palestine have appeared in the “You may like” section every day. These broader suggestions are also accompanied by more specific ones like “Shani Louk Full Video”, referring to Shani Louk, a missing German woman who was kidnapped by Hamas during a music festival. Videos of her dancing and footage of her lying unconscious on a pickup truck went viral.

Image: The plot shows the percentage of “You may like” lists per day containing at least one of the topics. Oktoberfest ended on October 3 and had been suggested frequently before then. The latest conflict between Israel and Hamas has appeared on every suggested list since the initial terror attacks on October 7.
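The per-day metric in this plot can be sketched in a few lines. This is a minimal sketch, not our actual analysis code: it assumes each recorded “You may like” list is stored as a (day, suggestions) pair, and it assigns suggestions to topics with a hypothetical keyword list and a simple case-insensitive substring test. A list counts toward a topic once if any of its ten strings matches, and the daily percentage is the number of matching lists divided by all lists recorded that day.

```python
from collections import defaultdict

# Hypothetical keyword lists per topic (an assumption for illustration).
# A suggestion matches a topic if any keyword occurs in it, ignoring case.
TOPICS = {
    "Oktoberfest": ["oktoberfest"],
    "Israel/Hamas": ["israel", "gaza", "palest", "hamas"],
}


def daily_topic_share(snapshots, topics=TOPICS):
    """Return {day: {topic: percent of that day's lists mentioning the topic}}.

    `snapshots` is an iterable of (day, suggestions) pairs, one pair per
    recorded "You may like" list.
    """
    lists_per_day = defaultdict(int)
    hits = defaultdict(lambda: defaultdict(int))
    for day, suggestions in snapshots:
        lists_per_day[day] += 1
        lowered = [s.lower() for s in suggestions]
        for topic, keywords in topics.items():
            # Count the whole list once if at least one suggestion matches.
            if any(k in s for s in lowered for k in keywords):
                hits[day][topic] += 1
    return {
        day: {topic: 100.0 * hits[day][topic] / n for topic in topics}
        for day, n in sorted(lists_per_day.items())
    }


# The two lists reproduced in this article, one snapshot per day.
SNAPSHOTS = [
    ("2023-10-03", ["Rosie Oktoberfest", "Oktoberfest Kotzhügel", "Julienco Ayliva",
                    "Chibi Doll Girl Outfit", "Van Gogh Pokemon", "Kaan Yavi Ex Frau",
                    "Mexify Mutter Tot Hamed", "Köln Marathon 2023",
                    "Frankfurt Verliert 0:2 In Wolfsburg", "From Satan Ist To Islam"]),
    ("2023-10-07", ["Italien Bus Unfall", "Israel Angriff Heute", "Israel Angriff Heute Live",
                    "Israel Gaza Heute", "Israel Heute Live", "Putin Geburtstag 2023",
                    "Israel October 2023", "Murcia Spain Fire", "Osnabrück Düsseldorf",
                    "Palestina Israel"]),
]

for day, shares in daily_topic_share(SNAPSHOTS).items():
    print(day, shares)
```

With only one list per day, as in this toy input, each share is either 0 or 100 percent; with many checks per day (around 40 on October 3), the shares become finer-grained. The truncated keyword “palest” is chosen so that both “Palestine” and the German “Palestina” match; naive substring matching can also produce false positives, so a real analysis would need manual review.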

Why the “You may like” Section is Problematic

Based on the observations above, the “You may like” section seems to be compiled automatically by TikTok, at least partly independently of users’ behavior or interests. But if the suggestions do not stem from an individual user’s interaction with the platform, TikTok cannot argue that users are simply shown what interests them. Rather, regardless of their interests, TikTok users are dragged by TikTok into the melange of true and false information and war propaganda.

This might not be illegal, but it is dangerous on several levels:

- Search suggestions like “Shani Louk Full Video” re-victimize the people affected and lead users to dehumanizing and disturbing content.

- TikTok uses victims and violence to drive engagement and, ultimately, revenue.

- Users’ mental health could suffer seriously, since they can be (re-)traumatized simply by clicking on a search suggestion.

- It poses a grave risk to public discourse by encouraging propagandists, as well as free riders using paid ads, to chase engagement by appearing in these search results.

Clicking on one of these suggested search strings takes you to a results page. Although the terms are in German (because our sock puppet is a German account logged in from Germany), the results come from around the world and span many languages. The videos range from disturbing, violent material to imams talking about the end of the world to people praying for Israel. Most of them come without any context or explanation and will likely be hard for many German users to understand. This is disturbing for people of every age group, but it poses a particular risk to TikTok’s relatively young user base, since Gen Z is known to use TikTok as a search engine and news source.

So, while TikTok brags about not having political ads on the platform (which is not entirely true, as we have shown here), it drags users into one of the most violent, controversial and complicated political conflicts of our time by suggesting that they search for these topics, without providing any context or help.
